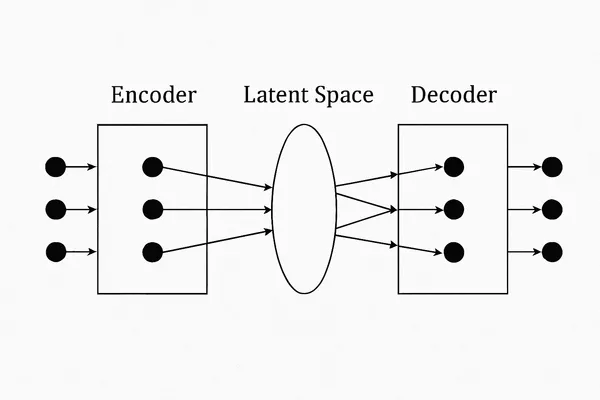

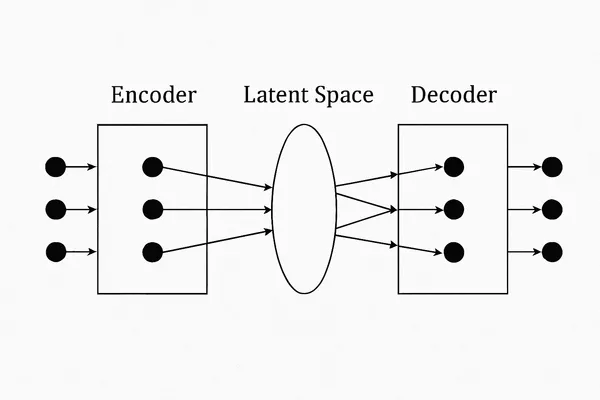

This article explores the core components of Encoder-Decoder models, their applications in sequence-to-sequence tasks, and how they enable deep learning solutions for machine translation, text summarization, and more.

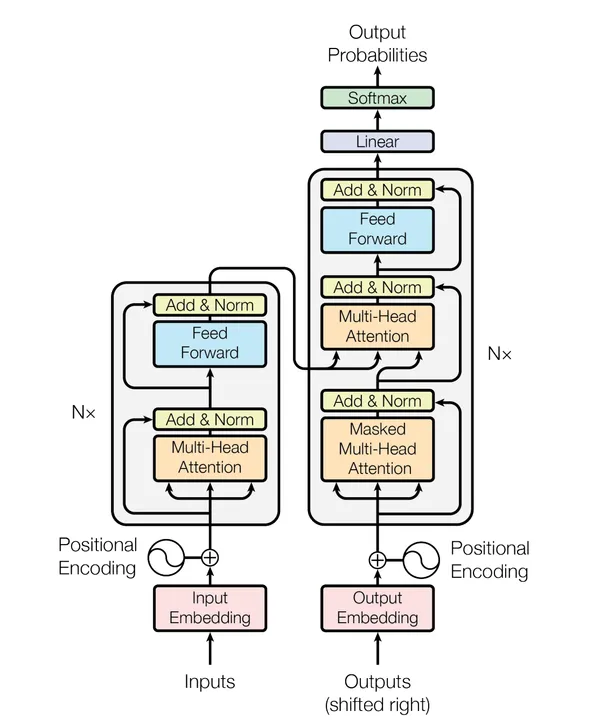

A comprehensive guide to the Transformer architecture, from its fundamental components to cutting-edge applications. Learn about self-attention, positional encodings, and how Transformers are reshaping AI across multiple domains.