Failed Implementation: 'Leave No Context Behind, Infinite Context Transformers'

Overview

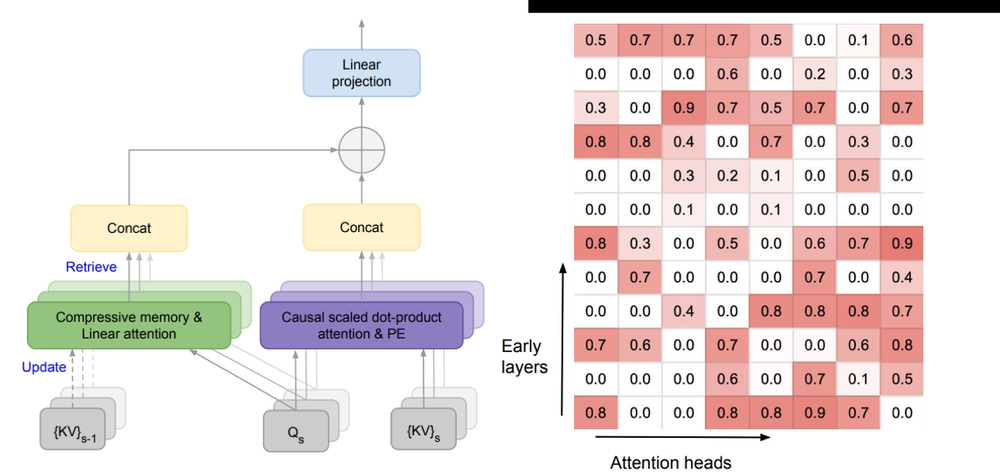

This write-up documents an exploratory effort to implement the Infini-attention mechanism from the paper “Leave No Context Behind: Efficient Infinite Context Transformers with Infini-attention.” While the attempt did not yield a working prototype, the process highlighted key design difficulties and implementation bottlenecks in adapting attention architectures to unbounded contexts.
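For readers unfamiliar with the mechanism, the sketch below illustrates the per-segment recurrence the paper describes: each segment reads from a fixed-size compressive memory, runs ordinary causal attention locally, blends the two with a gate, and then writes its own keys and values into the memory. It is a minimal single-head NumPy illustration, not the code from the repository linked in this post. The function names, the `1e-8` stabilizer, and the choice to pass in already-projected `q`, `k`, `v` are assumptions made for brevity; it uses the paper's plain linear memory update rather than the delta-rule variant, and `beta` is a fixed scalar here instead of a learned parameter.

```python
import numpy as np


def elu_plus_one(x):
    # sigma(x) = ELU(x) + 1, which keeps the feature map strictly positive.
    return np.where(x > 0, x + 1.0, np.exp(np.minimum(x, 0.0)))


def infini_attention_segment(q, k, v, memory, norm, beta):
    """Process one segment. q, k: (n, d_k); v: (n, d_v);
    memory: (d_k, d_v); norm: (d_k,); beta: scalar gate logit."""
    d_k = q.shape[-1]
    sq, sk = elu_plus_one(q), elu_plus_one(k)

    # 1. Retrieve from the memory written by *previous* segments.
    #    The small constant is an assumption to avoid 0/0 on the first segment.
    a_mem = (sq @ memory) / (sq @ norm + 1e-8)[:, None]

    # 2. Standard causal softmax attention within the current segment.
    scores = q @ k.T / np.sqrt(d_k)
    future = np.triu(np.ones_like(scores, dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    weights = np.exp(scores - scores.max(axis=-1, keepdims=True))
    weights /= weights.sum(axis=-1, keepdims=True)
    a_dot = weights @ v

    # 3. Blend long-term (memory) and local context with a sigmoid gate.
    gate = 1.0 / (1.0 + np.exp(-beta))
    out = gate * a_mem + (1.0 - gate) * a_dot

    # 4. Write this segment into the memory (linear update rule).
    new_memory = memory + sk.T @ v
    new_norm = norm + sk.sum(axis=0)
    return out, new_memory, new_norm


def run_segments(q, k, v, segment_len, beta=0.0):
    """Chunk a long sequence and carry (memory, norm) across segments."""
    memory = np.zeros((k.shape[-1], v.shape[-1]))
    norm = np.zeros(k.shape[-1])
    outputs = []
    for start in range(0, q.shape[0], segment_len):
        s = slice(start, start + segment_len)
        out, memory, norm = infini_attention_segment(
            q[s], k[s], v[s], memory, norm, beta
        )
        outputs.append(out)
    return np.concatenate(outputs), memory, norm
```

The point of the design is that `memory` and `norm` keep the same shape no matter how many segments are processed, which is what makes the context length unbounded in principle. The state carried between segments is also where much of the implementation difficulty lies, since it has to be tracked, reset, and kept numerically stable across calls.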

Source Code

You can find the code and notes from the implementation attempt on GitHub:

https://github.com/Genereux-akotenou/transformers

Coming Soon

A follow-up article will dive into lessons learned, including challenges with chunkwise recurrent processing, memory state tracking, and stability in decoder-only models.